
Learning Assessments

Evidence of Learning

Too often, the word "assessment" sends chills down the spine of faculty. Frequently, it conjures up images of overly complicated reporting procedures which reduce instructional efforts to data points that fail to reveal what really occurs in our learning environments. Further, faculty often feel like they are being judged. While it is true that good assessment yields useful information on the impact of our teaching, the real emphasis is—and ought to be—on learning: on seeing what works and what doesn't in a climate of trust. In order to do this, we need to shift our paradigm to one that values curiosity, experimentation, and innovation, and seeks to answer basic questions about cause and effect: If I try this, what happens? What is the impact of teaching on learning? How do we measure it?

To make assessment less daunting, let's think about what we really want and need our students to be able to do in our classes to demonstrate that learning has occurred. Let's think about how we can set the stage for good assessment at critical intervals and in key places such as the syllabus, assignments and rubrics, and class discussions. Let's stay curious and learning-centered, and think about how we can link teaching and learning. Then let's think about how to measure the impact of instructional innovations on learning gains.

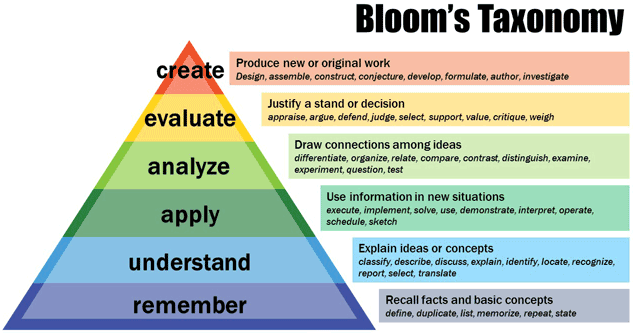

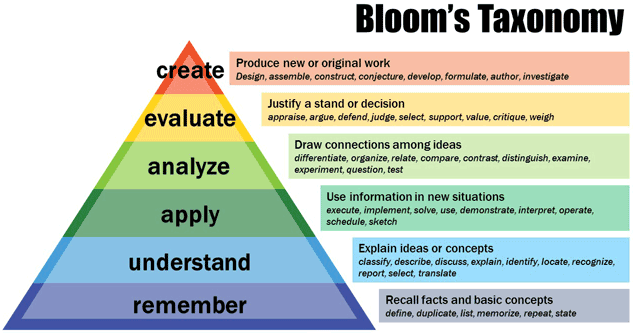

To help us out, we can look with confidence to Bloom's Taxonomy Pyramid, which signifies the importance of the "lower-level" thinking and learning skills and their relationship to, and path toward, what is often considered the epitome of learning: creating new knowledge. A word of caution: don't rush up the pyramid. A lot of significant learning experiences occur along the way. Indeed, some of the best new research focuses on the pyramid's base and on how the brain must be made to work hard (reality check: teaching should be a fun challenge, and learning should be hard) in order to recall, apply, and develop knowledge. If you want to learn more about this, come by the CETL office (Education Building, Suite 220) to talk, and while you're at it, pick up a copy of Make It Stick, The New Science of Learning, Learning Assessment Techniques, or any of a host of other books we can share with you.

Most of us have seen the Bloom's Taxonomy Pyramid shown above, and many of us use it as a foundation for organizing and coding the kinds of learning and work we want our students to do in our classes. That's all fine and good, but it can be a little "teacher-centered." If we shift our emphasis just a bit, putting on a pair of learning-centered lenses, we begin to think more creatively about how we accomplish learning at each level. More importantly, this moves us away from simply stating (hypothetically) "I give students a multiple-choice test to show me they remembered these key principles" and toward a greater integration of what we do in and outside of class to help our students develop and demonstrate a command of the material. Assessment helps us think about learning as a process, and so it appropriately relies on verbs.

Cover the right-hand side of the Bloom's Taxonomy Pyramid shown above and ask yourself:

Where do the following terms fit in Bloom's Taxonomy Pyramid?

| | | | | | |
|---|---|---|---|---|---|
| Assemble | Calculate | Cite | Classify | Contrast | Criticize |
| Critique | Defend | Define | Demonstrate | Design | Devise |
| Diagram | Distinguish | Explain | Identify | Illustrate | Integrate |
| Locate | Measure | Persuade | Prioritize | Produce | Recite |
| Report | Reproduce | Select | Simulate | Solve | Summarize |

You will notice that certain verbs fall logically into certain tiers, which helps us think about the exercises and assignments we can use to gather evidence of significant learning experiences at all levels.

Bloom's Taxonomy Pyramid (from lowest to highest)

- Remembering: Think about the basic features of a thing or phenomenon.

define, identify, label, locate, list, match, quote, recall, recognize, recite

- Understanding: Think about how or why something works.

describe, explain, restate

- Applying: Think about applying a rule to a different situation, or in a different context.

apply, complete, illustrate, simulate

- Analyzing: Think about breaking information, such as quantitative data, into its parts and examining how they relate.

compare, contrast, differentiate, interpret

- Evaluating: Think about measuring, judging, or rating something against criteria.

estimate, judge, prioritize, rate, score

- Creating: Think about inventing something new.

compose, construct, design, develop, formulate, hypothesize, invent, produce

So, how does this all come together in a class?

Great question. It is tempting, and in the right context entirely appropriate, to turn to Angelo and Cross's Classroom Assessment Techniques and Barkley and Major's Learning Assessment Techniques. Often, though, we need to fundamentally reassess, and potentially redesign, our courses. For that, please see CETL's Course (Re)Design and Student Learning Outcomes. Each, in different but related ways, helps us develop significant learning experiences for our students.

Assessing Student Learning Outcomes

Tips for Effective Measurement

Methods and Instruments

When contemplating how to measure student learning outcomes, it may be helpful to think in terms of assessment methods and instruments.

- Methods: the general types of tools used to assess student learning outcomes. Do your students typically take exams? Would you get a clearer sense of whether they accomplished the learning outcomes from a written assignment or an oral presentation?

- Instruments: the actual assignments, quizzes, exams, and projects used to complete assessments. These are what you give to your students and what they complete for you.

Once you have figured out the best general method of assessing your student learning outcomes (SLOs), you can develop or retrofit existing instruments to measure them. So, the first step is to determine the method you want to use and the second step is to develop the actual instrument.

Choosing assessment methods and developing assessment instruments

Consider the range of methods relevant to your discipline, course, and desired learning outcome. What are the general ways students reveal what they know or what they can do? Note that the most common assessment methods include:

- Tests — multiple choice, short answer, essay

- Formal writing assignments — research papers, reaction papers, creative writing assignments

- Performances — presentations, simulations, demonstrations

- Portfolios — organized, aggregate representations of student work

Once you have decided on a method, it is time to shift to the instrument: move from a general idea to a specific, implementable version of it.

Example: For a given class, it may be decided that the most effective method to measure student performance (related to a student learning outcome) is to have students do an oral presentation in class. "In-class presentation" is the general method, and the assessment instrument might be stated and measured as "an in-class oral presentation that requires the students to identify (or reveal) X, Y, and Z (certain specific types of information or skills)".

Tips for pulling it all together

- One way to balance meaningful results with time spent scoring is to use one assessment instrument to measure more than one outcome. This approach works especially well if you have both skill- and knowledge-based outcomes to assess.

Example: One assignment, three student learning outcomes…

- Information literacy

- Hypothesis formulation

- Data analysis

- Make sure the assignment or exam questions are directly aligned with the learning outcomes.

- Write and share clear directions. Articulate the expectations for completing the assignment.

- Pilot the instrument and ask for feedback from the students and faculty who used the instrument.

- Use rubrics. This is one of those endeavors that consume a lot of time up-front but yield substantial dividends later. The bonus is that it keeps the students and the instructor on the same page of the instructional and grading script. There are no surprises. Students see where the points are gained and lost, and grading goes rather quickly and easily.

- Always consider multiple means of representation, expression, and engagement, as called for by Universal Design for Learning (UDL).

- Always link your assignments to your syllabus. Think about the "what" and the "why" questions. Too often, we tell students what to do without explaining why, or we have them do a bunch of stuff that does not appear to be related to the course description or the learning goals. Make your class make sense. In your syllabus, clearly identify your student learning outcomes, and also justify their value to the learning process: why is this the evidence of learning you are looking for? Do the same for your assignments and assessments. Every assignment should represent an effort to generate evidence that a learning goal has been accomplished.

Assess Learning in Different Contexts & Dimensions

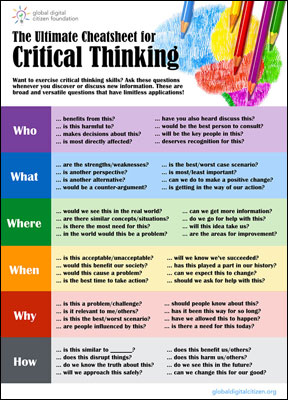

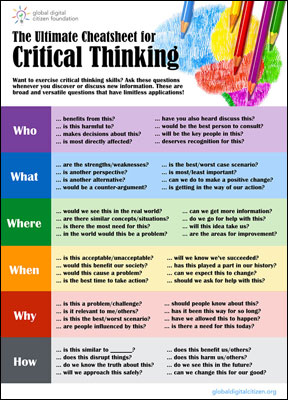

Critical Thinking, Critical Reflection, Metacognition, Civic Engagement, & Service Learning

Our primary goal as teachers is to enhance learning, but what evidence do we have and use to demonstrate that learning has occurred in our classes and through different learning experiences? How do we assess it, measure it, and then make improvements? How do we empower our students to become genuinely aware of their learning and to make the most of instructor feedback?

Listed below are resources to help you view the learning environment through the lenses of the students and, as faculty, to develop, adapt, or adopt strategies that engage learners and document learning, especially as it pertains to critical thinking, reflection, and metacognition.

Want to dive into the research on measuring learning? If so, check out the following:

- Carrell, S. E., & West, J. E. (2010). Does professor quality matter? Evidence from random assignment of students to professors. Journal of Political Economy, 118(3), 409-432.

- Clayson, D. E. (2009). Student evaluations of teaching: Are they related to what students learn? A meta-analysis and review of the literature. Journal of Marketing Education, 31(1), 16-30.

- Johnson, V. E. (2003). Grade inflation: A crisis in college education. New York: Springer-Verlag.

- Marks, M., Fairris, D., & Beleche, T. (2010, June 3). Do course evaluations reflect student learning? Evidence from a pre-test/post-test setting. Department of Economics, University of California, Riverside.

- Nilson, L. B. (2013). Measuring student learning to document faculty teaching effectiveness. In J. E. Groccia & L. Cruz (Eds.), To Improve the Academy: Resources for Faculty, Instructional, and Organizational Development (Vol. 32, pp. 287-300). San Francisco: Jossey-Bass.

- Steiner, S., Holley, L. C., Gerdes, K., & Campbell, H. E. (2006). Evaluating teaching: Listening to students while acknowledging bias. Journal of Social Work Education, 42, 355-376.

- Weinberg, B. A., Fleisher, B. M., & Hashimoto, M. (2007). Evaluating methods of evaluating instruction: The case of higher education (NBER Working Paper No. 12844). National Bureau of Economic Research.

- Weinberg, B. A., Hashimoto, M., & Fleisher, B. M. (2009). Evaluating teaching in higher education. Journal of Economic Education, 40(3), 227-261.

RESOURCE MATERIALS

Of Syllabi and SLOs (slides)

The value and attributes of a good syllabus, and ways to think about articulating and accomplishing student learning outcomes.

Goals, Objectives, and Outcomes (pdf)

- Brown, Peter C., et al. Make It Stick: The Science of Successful Learning. Belknap Press, 2014.

- Doyle, Terry, et al. The New Science of Learning: How to Learn in Harmony with Your Brain. Stylus Publishing, 2013.

- Classroom Assessment Techniques (CATs) (pdf)

50 CATs by Angelo and Cross

- Barkley, Elizabeth F., and Claire Howell Major. Learning Assessment Techniques: A Handbook for College Faculty. Jossey-Bass, 2016.
