Technological Singularity

-- posted September 2015 --

The concept of technological singularity is explained in the following video (12:16 minutes):

https://www.youtube.com/watch?v=GplcPeh6JM0

The futurist Ray Kurzweil is probably the best-known proponent of the concept, which he explains in his book The Singularity Is Near. I strongly recommend it.

This term is related to the exponential advancement of technology: the rate at which technology advances is itself accelerating. For instance, our first technological innovations, such as the wheel and stone tools, took tens of thousands of years to evolve and become widely used; the printing press took about a hundred years to be widely adopted; computers took about twenty years to become pervasive in our lives; and our latest technologies, e.g. smartphones, take only a few years to spread worldwide. Seen another way, the amount of technological progress made in the last 20 years will be matched in the next 14 years, then the same amount again in just 7 years after that, and so on. This exponential rate of change means that we will experience about a thousand times more progress in the 21st century than in the 20th century.
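The "thousand times" figure can be reproduced with simple arithmetic under one assumption, a simplification of Kurzweil's argument rather than his own calculation: the rate of progress doubles every decade. The Python sketch below integrates a progress rate of 2^(t/10) over each century, where t is measured in years relative to 2000 and progress is counted in "years of progress at the year-2000 rate". The names `DOUBLING_YEARS` and `cumulative_progress` are hypothetical, chosen only for this illustration.

```python
import math

# Assumption (illustrative simplification, not Kurzweil's exact method):
# the rate of technological progress doubles every DOUBLING_YEARS years.
DOUBLING_YEARS = 10.0


def cumulative_progress(start: float, end: float, doubling: float = DOUBLING_YEARS) -> float:
    """Integrate the progress rate 2**(t / doubling) from t = start to t = end.

    t is in years relative to a reference year (t = 0), where the rate is
    1 "year of progress at the reference-year rate" per calendar year.
    """
    k = math.log(2) / doubling  # continuous growth constant
    return (math.exp(k * end) - math.exp(k * start)) / k


# Reference year 2000: the 20th century is t in [-100, 0], the 21st is t in [0, 100].
progress_20th = cumulative_progress(-100, 0)
progress_21st = cumulative_progress(0, 100)

print(f"20th century: {progress_20th:.1f} years of progress at the year-2000 rate")
print(f"21st century: {progress_21st:.0f} years of progress at the year-2000 rate")
print(f"ratio: {progress_21st / progress_20th:.0f}")
```

Under this model the 21st-century integral runs from 2^0 to 2^10 and the 20th-century one from 2^-10 to 2^0, so their ratio is exactly 2^10 = 1024. That is consistent with "about a thousand times". Changing the assumed doubling time shows how sensitive the figure is to that single assumption.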

Progress will increasingly be driven by the convergence of different technologies, in the sense that improvements in one scientific field will drive progress in others. For example, innovations in nanotechnology will lead to advances in medicine (cell regeneration, targeted drug delivery to particular organs), computer technology (microchips, memory at the atomic level), and so on.

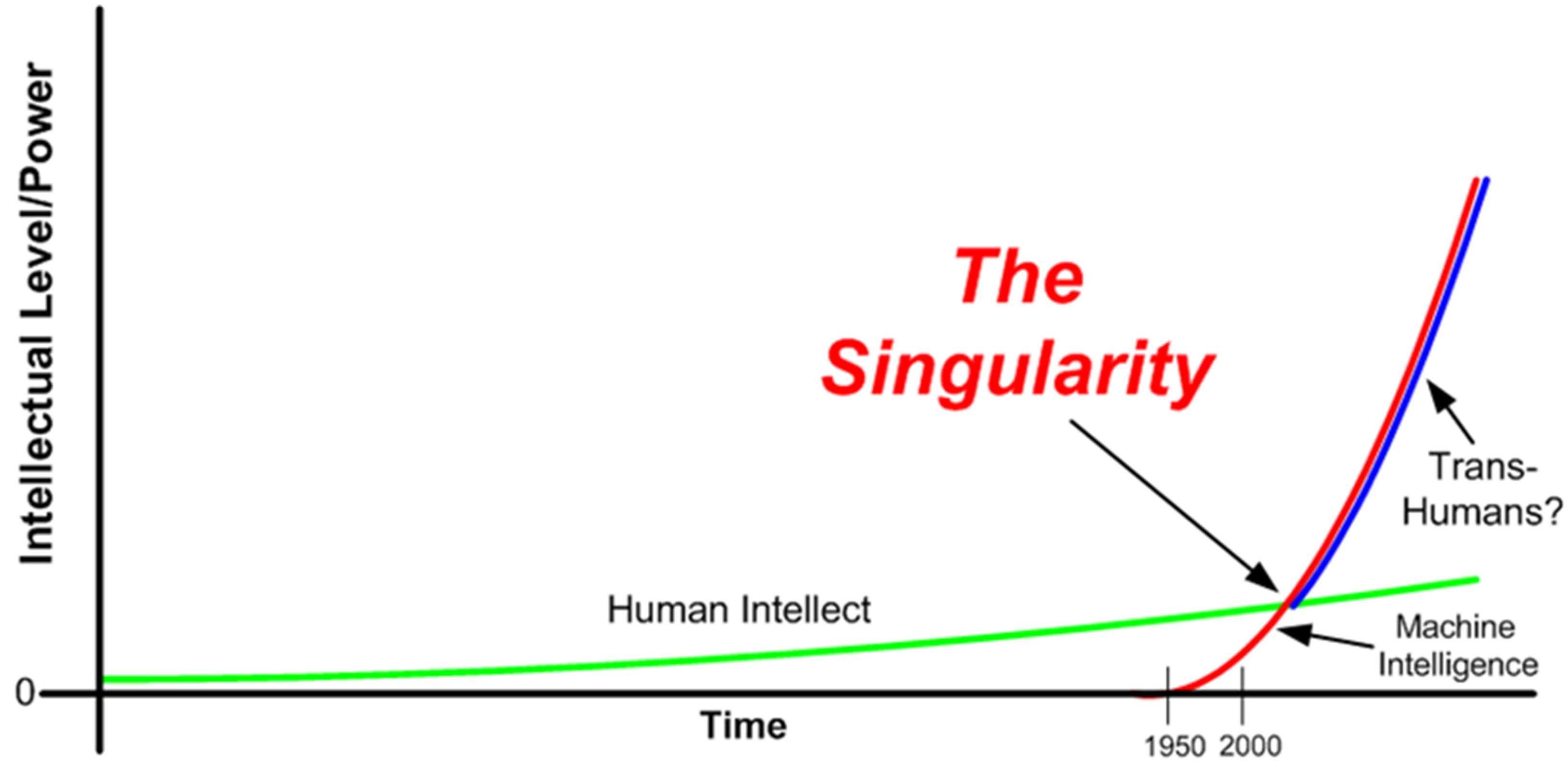

In the book, Kurzweil predicts that by the end of the next decade we will have the knowledge and computational resources to completely map and model the human brain, about which we currently know little. This, in turn, will enable us to design machines that can emulate the human brain and reason as humans do. Moreover, in about 30 years machine intelligence will be so advanced that it will greatly outperform our own. Technological advancement driven by this transhuman intelligence will be so rapid that it will create an irreversible turning point in evolution: the technological singularity.

At that point, we will connect to machines and use both our own intelligence and the artificial intelligence they provide. Machines' ability to store huge amounts of information and access it instantly, their high-speed information processing (compared to the slow inter-neuronal connections of the human brain), and their capacity to share knowledge instantly will significantly augment our brains and help us overcome some of our biological limitations. This merger of our biological bodies with our technology will be a consequence of the technological singularity.

That doesn’t mean we will no longer be human; it only means that we will ‘transcend’ our biological nature. To the criticism that such a transition will dehumanize us, Kurzweil replies that it stems from an inability to comprehend the level of refinement artificial intelligence will achieve, which will vastly exceed biological human intelligence. Furthermore, technological progress driven by non-biological intelligence will be even more rapid, to such an extent that, with our current level of understanding, it is impossible to predict its implications.

There are many supporters of this idea (myself included), since the exponential advancement of technology is a fact and there is no evidence that this accelerating progress will stop or slow down any time soon. On the other hand, there are critics who believe that machines will never reach the level of human intelligence, because machines cannot think and the programs that run them cannot capture the human mind and the intricate hierarchical levels of human reasoning.

My favorite idea from the video's narration is that it is very difficult for us to predict the outcomes of the technological singularity and its influence on our lives, just as it would have been very difficult for our ape-like ancestors to predict our current level of technological development and the way we live today.

Quotes from the book The Singularity Is Near:

Arthur C. Clarke: “Any sufficiently advanced technology is indistinguishable from magic.”

Arthur Schopenhauer: “Everyone takes the limits of his own vision for the limits of the world.”

Michael Anissimov: “When the first transhuman intelligence is created and launches itself into recursive self-improvement, a fundamental discontinuity is likely to occur, the likes of which I can’t even begin to predict.”

Isaac Asimov: “I do not fear computers. I fear the lack of them.”

Marshall McLuhan: “First we build the tools, then they build us.”

Eliezer Yudkowsky: “Our sole responsibility is to produce something smarter than we are; any problems beyond that are not ours to solve.”